Losing the Plot: Is Emotionally Adaptive AI Just a Load of Bullsh*t?

Losing the Plot: Is Emotionally Adaptive AI Just a Load of Bullsh*t?

The Kuleshov Effect and the Truth Behind Emotionally Adaptive AI

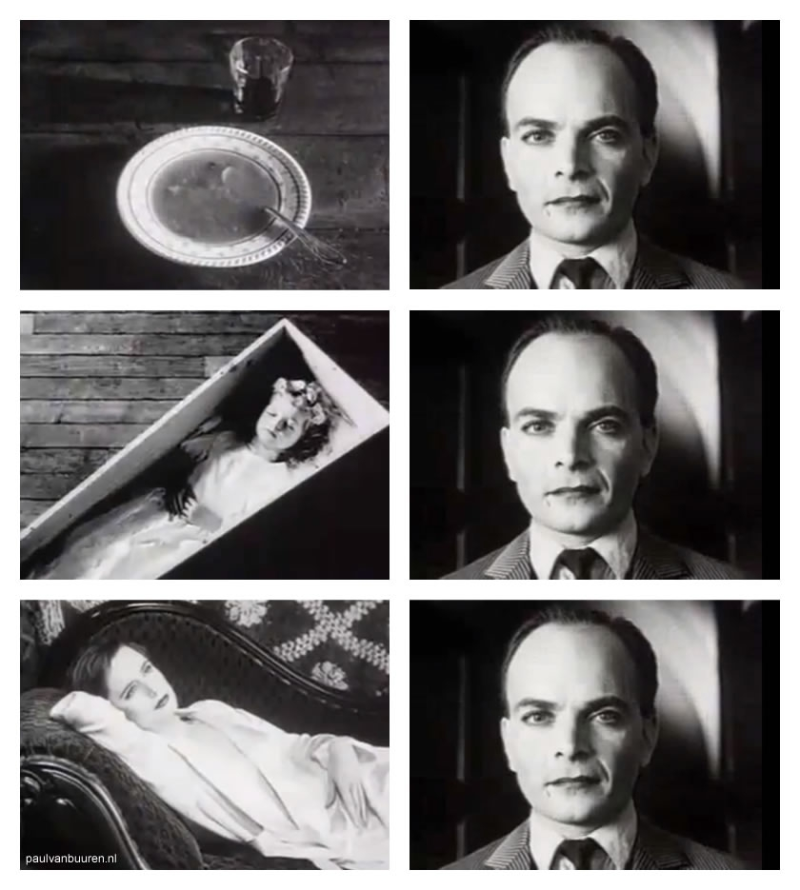

In 1918, Soviet filmmaker Lev Kuleshov conducted an experiment that would reshape how we understand emotion, not just on the silver screen, but on a human-to-human basis. He took a short clip of an actor with a neutral expression and intercut it with three separate images: a bowl of soup, a child in a coffin, and a reclining woman. Audiences swore they saw hunger, grief, and desire in the actor’s face. But the face never changed. What changed was the context. And with it, the emotion people believed they were seeing.

The Kuleshov Effect: Actor Ivan Mozzhukhin’s static expression interspersed

with emotionally charged images

The Kuleshov Effect has since become one of the most cited principles in film theory. But it’s more than just a cinematic trick. It reveals something profound about how humans process emotion: we don’t just react to facial expressions or vocal tones in isolation. We interpret them in relation to everything that’s happening around them. We project intent. We infer backstory. We fill in the gaps. We use context. And if our brains can be “misled” in a darkened cinema by a few silent edits, imagine what happens when an AI system tries to read emotion without any understanding of the scene.

‘Real’-to-Reel: Why AI Misreads the Script

It’s no surprise that many people remain skeptical about emotionally-adaptive AI. For years, the field was dominated by systems that claimed to read human feeling - the same way you’d scan a barcode. One input, one output. A raised eyebrow meant surprise. A furrowed brow meant anger. A smile meant happiness, regardless of whether it was nervous, ironic, or hollow. These early models promised precision but delivered little more than a very convincing caricature.

They took something fluid and deeply contextual like human emotion and flattened it into static labels hoping to get it right more than it got it wrong. In most cases it succeeded, but it was a fluke – and people saw right through it. Even if what they were seeing seemed real, they viewed it with the same healthy dose of skepticism they might view a magic trick, looking for the “tells” and trying to unravel it. Many experts publicly stated that AI’s ability to read emotion was, to use their language, “bullshit.”

So critics of AI that claimed to be “emotionally in tune” quite sensibly pushed back. It’s a step too far; a rubicon that AI would never cross. But the problem wasn’t the ambition, it was the framing. Emotion is more than a fixed expression waiting to be decoded – it’s a dynamic signal that only makes sense within a broader narrative. We don’t cry just because. We cry because we’ve been carrying something heavy for too long. Or because someone surprised us with kindness. Or because we laughed too hard and it caught us off guard. Perhaps we’re just very empathetic and feel things very deeply. The expression doesn’t tell the full story. What matters is everything else around it.

Emotion is Context

The Kuleshov Effect is a timely reminder of how meaning is constructed. It doesn’t disprove emotionally-adaptive AI; it just confirms that previous iterations lacked one incredibly important detail: context. At Neurologyca, we see the Kuleshov Effect as a blueprint. Context isn’t optional. It’s the starting point.

For too long, emotion AI has treated human feeling like a classification problem, as if the goal were to pigeonhole people into tidy boxes marked happy, sad, or angry. But what use is that? Real emotional experience overlaps, evolves, and contradicts itself all the time. You can be confident and anxious at the same time. You can smile while grieving. You can feel a surge of energy moments before burning out. Like music, the meaning of our emotions depends on rhythm, harmony, and tone. We might hear a C7 note and think it’s melancholic, but one note does not reveal the feeling behind a song. We need to hear those notes together – how they relate, shift, and resolve over time.

This is the shift we’ve made at Neurologyca: from labeling expressions to interpreting patterns. We don’t ask “what’s the face doing?” – we ask “what’s changing, and why?” Someone’s attention might be drifting during a training session – but is that because they’re bored or overwhelmed?

If the early wave of emotion AI tried to work like a mirror, reflecting facial expressions back as emotional categories, today’s AI technology works more like a lens. We’re not interested in surface-level readings. We’re interested in what lies beneath them: the tension between how someone appears and what they’re experiencing. To get there, we combine multiple layers of analysis that unfold in real time – not as guesses, but as grounded, evidence-based adaptive interpretations of human behavior.

Bringing a Human Context Layer to AI

First, we read signals across modalities. Yes, facial micro-expressions, but also eye movement, posture, vocal tone, blink rate – the small physiological shifts that hint at rising mental effort, declining confidence, or subtle stress. These aren’t treated as standalone cues. Instead, we analyze them against contextual baselines such as how a person might typically behave in a given environment, and how their signals evolve moment to moment. Then we ask: what is this person trying to do? Are they explaining something? Making a decision? Processing information? We map intent as part of the emotional equation, because meaning depends on purpose. A furrowed brow might suggest confusion while listening, but focus while problem-solving. Same face, different story.

Next, we model human factors that reflect deeper internal states. Things like cognitive load, mental fatigue, emotional engagement, or readiness to act. These are not fixed “emotions” to be labeled, but dynamic indicators that help the system adapt. These insights can be anonymously “crowd-sourced” from a broad userbase, or learned from an individual to create an accurate personality baseline.

Crucially, all of this can happen on-device, and because our platform uses point-based tracking instead of photographic input – tracking the contours and changes of facial features rather than analyzing the face itself – the identity of users remains protected. Without any wearables or peripheral devices, a user can simply sit down at any camera-enabled device and let the software do the work. There are no stored images, no need for cloud processing, no intrusive hardware. Just an awareness layer that can help systems like Large Language Models become more attuned to their human users.

We’re not trying to teach machines how to feel. We’re teaching them how to recognize how the human on the other side of the screen is feeling.

The Kuleshov Effect showed us that meaning doesn’t live in the face, but lives in the frame. Emotion AI that ignores context deserves skepticism because it’s fundamentally misreading the story, and if it does get something right, it just got lucky. But, times change. And technology evolves and advances. Our R&D teams are working hard on creating the next generation of truly adaptive AI – systems that don't just read the screen, but read the scene.

%203.png)